My personal favourites:

- wordUnit: A Document Testing Framework by Kent Beck

- Refuctoring by Jason Gorman

- Defect-full Code: Ensuring Future Income with Maintenance Contracts by Kay Pentecost

- Testing: Saving the Best for Last by Lisa Crispin

Enjoy!

Dedicated to sharing interesting news and innovations related to GE Smallworld GIS software. This blog is not related to, endorsed by or supported by General Electric.

_pragma(classify_level=debug)

_method ds_version_view.debug!all_checkpoints()

## debug!all_checkpoints() : sorted_collection

##

## returns a sorted_collection of all checkpoints in self and

## the tree beneath self sorted from most recent to oldest.

## The elements returned are actually simple_vectors of the

## form:

## {checkpoint A_CHECKPOINT,string ALTERNATIVE_NAME}

##

_local checkpoints << sorted_collection.new(_unset,

_proc(a,b)

>> a[1].time_created > b[1].time_created

_endproc)

_for a_view _over _self.actual_alternatives()

_loop

checkpoints.add_all(a_view.checkpoints.map(_proc(a_cpt)

_import a_view

>> {a_cpt,a_view.alternative_path_name()}

_endproc))

_endloop

_return checkpoints

_endmethod

$

_pragma(classify_level=debug)

_global show_all_checkpoints <<

_proc@show_all_checkpoints(a_view)

## show_all_checkpoints(ds_version_view A_VIEW) : _unset

##

## shows all checkpoints and related information for A_VIEW and

## tree.

_for cpt_info _over a_view.debug!all_checkpoints().fast_elements()

_loop

show(cpt_info[1].time_created,cpt_info[1].checkpoint_name,cpt_info[2])

_endloop

_endproc

$

MagikSF> show_all_checkpoints(gis_program_manager.cached_dataset(:gis))

$

date_time(10/30/07 17:34:44) "is!insync_1193790807" :||oracle_sync_parent|

date_time(10/30/07 17:34:44) "is!insync_1193790807" :||oracle_sync_parent|oracle_sync|

date_time(10/30/07 16:32:54) "is!insync_1193787116" :||oracle_sync_parent|

date_time(10/30/07 16:32:53) "is!insync_1193787116" :||oracle_sync_parent|oracle_sync|

date_time(10/30/07 15:33:09) "is!insync_1193783570" :||oracle_sync_parent|

date_time(10/30/07 15:33:08) "is!insync_1193783570" :||oracle_sync_parent|oracle_sync|

date_time(10/30/07 14:34:02) "is!insync_1193780009" :||oracle_sync_parent|

date_time(10/30/07 14:34:02) "is!insync_1193780009" :||oracle_sync_parent|oracle_sync|

date_time(10/30/07 13:33:52) "is!insync_1193776407" :||oracle_sync_parent|

_block

# if you want to use your GIS tools to see what the data looked

# like at a previous checkpoint, then get a handle on the

# current view and go to that checkpoint in READONLY mode.

# DO NOT switch to :writable while at this alternative unless

# you are ABSOLUTELY SURE of what you are doing. The safest

# action to take once you are done viewing data at the old

# checkpoint is to switch to the "***disk version***"

# checkpoint in that alternative before continuing.

_local v << gis_program_manager.cached_dataset(:gis)

v.switch(:readonly)

v.go_to_alternative("|oracle_sync_parent",:readonly)

v.go_to_checkpoint("is!insync_1192840224",:readonly)

_endblock

$
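The comment above recommends returning to the "***disk version***" checkpoint once you have finished looking at the old data. A minimal sketch of that last step follows. It assumes that go_to_checkpoint() accepts "***disk version***" in the same way it accepts a named checkpoint, and that the view is still in the alternative visited above; neither is confirmed by the post, so try it on a test database first.

```magik
_block
	# Assumption: "***disk version***" can be passed to go_to_checkpoint()
	# just like any other checkpoint name. Stay in :readonly mode.
	_local v << gis_program_manager.cached_dataset(:gis)
	v.go_to_checkpoint("***disk version***",:readonly)
_endblock
$
```

The method all_available_versions() further down uses rollforward() to reach the :current state of a view, which may be an alternative way to get back.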

_block

# if you want to keep the main dataset at the current view and

# then get a second handle to the old_view so that you can copy

# old data (e.g., incorrectly deleted/updated data) into the

# current view, then get a handle on a REPLICA of the current

# view and go to that previous checkpoint in READONLY mode.

# DO NOT switch to :writable while at this alternative unless

# you are ABSOLUTELY SURE of what you are doing. The safest

# action to take once you are done viewing data at the old

# checkpoint is to switch to the "***disk version***"

# checkpoint in that alternative before continuing.

_global old_view

# once you have finished using OLD_VIEW, be sure to :discard()

# it.

old_view << gis_program_manager.cached_dataset(:gis).replicate()

old_view.switch(:readonly)

old_view.go_to_alternative("|oracle_sync_parent",:readonly)

old_view.go_to_checkpoint("is!insync_1192840224",:readonly)

_endblock

$
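Because the replica has to be discarded when you are done, it can help to wrap the work in _protect ... _protection, so that discard() runs even if something fails halfway. The sketch below only rearranges calls already shown above; the copy step is left as a placeholder comment, and resetting the global to _unset is my own addition so that a stale handle is not reused by accident.

```magik
_block
	_global old_view
	old_view << gis_program_manager.cached_dataset(:gis).replicate()
	_protect
		old_view.switch(:readonly)
		old_view.go_to_alternative("|oracle_sync_parent",:readonly)
		old_view.go_to_checkpoint("is!insync_1192840224",:readonly)
		# ... copy the records you need from old_view into the
		# current view here ...
	_protection
		# always release the replica, even after an error
		old_view.discard()
		# added for illustration: drop the stale handle
		old_view << _unset
	_endprotect
_endblock
$
```

This is the same pattern that all_available_versions() uses for its own replicas.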

record_transaction.new_update()

record_transaction.new_insert()

ds_collection.at()

ds_collection.insert()

ds_collection.clone_record()

ds_collection.update()

ds_collection.insert_or_update()

_pragma(classify_level=debug)

_iter _method ds_version_view.all_available_versions(_optional top?)

## yields all available Versions of a replicate of

## _self in the following order:

##

## alternatives (if TOP? isnt _false: beginning with ***top***)

## -- checkpoints (beginning with :current)

## -- base versions for checkpoints (only for alternative_level

## > 0)

##

## for each version yields

## A_VIEW,ALTERNATIVE_PATH_NAME,CHECKPOINT_NAME,BASE_LEVEL, CHECKPOINTS_TIME_CREATED

## where A_VIEW is always a replicate, which will be discarded

## outside this method. The order of the CHECKPOINTS will be in

## reversed chronological order (i.e. beginning with the most

## recent one)

##

## BASE_LEVEL is 0 for the version itself, -1 for the immediate

## base version, -2 for the base version of the base version

## and so on

##

## example:

## v,:|***top***|,:current,0,v.time_last_updated()

## v,:|***top***|,"cp_1",0,cp_1.time_created

## v,:|***top***|,"cp_2",0,cp_2.time_created

## v,:||alt1|,:current,0,v.time_last_updated()

## v,:||alt1|,:current,-1,v.time_last_updated()

## v,:||alt1|,"cp11",0,cp11.time_created

## v,:||alt1|,"cp11",-1,v.time_last_updated()

## v,:||alt2|,:current,0,v.time_last_updated()

## ...

##

## NB:

## If the different versions of _self have different

## datamodels, you might be annoyed by messages like the following:

##

## compiling my_table.geometry_mapping

## fixing up details for dd_field_type(my_field_type) : Version 12345, transition to 54321

## Defining my_other_table

##

## To avoid this, you might want to suppress any output by

## redefining the dynamics !output! and suppressing the output

## of the information conditions db_synchronisation and

## pragma_monitor_info. Of course you have then to put any

## wanted output to other output targets like !terminal! or a

## locally defined external_text_output_stream. So your code

## might look like the following:

## MagikSF> t << _proc(a_database_view)

## _handling db_synchronisation, pragma_monitor_info _with procedure

## _dynamic !output! << null_text_output_stream

##

## # ... any more preparation code ...

##

## _for r,alt_pname,cp,base_level,cp_time _over a_database_view.all_available_versions()

## _loop

##

## # ... my code running in the loop, for example:

## !terminal!.show(alt_pname,cp,base_level,cp_time)

## !terminal!.write(%newline)

## !terminal!.flush()

## # ...

##

## _endloop

##

## # ... any summary output, for example:

## !terminal!.write("Ready.",%newline)

## !terminal!.flush()

## # ...

##

## _endproc.fork_at(thread.low_background_priority)

##

## If you don't want to use these !terminal! calls, you could

## also dynamically redefine !output!:

## MagikSF> t << _proc(a_database_view)

## _handling db_synchronisation, pragma_monitor_info _with procedure

## _dynamic !output! << null_text_output_stream

##

## # ... any more preparation code ...

##

## _for r,alt_pname,cp,base_level,cp_time _over a_database_view.all_available_versions()

## _loop

## _block

## _dynamic !output! << !terminal!

## # ... my code running in the loop, for example:

## show(alt_pname,cp,base_level,cp_time)

## # ...

## _endblock

## _endloop

##

## !output! << !terminal!

## # ... any summary output, for example:

## write("Ready.")

##

## _endproc.fork_at(thread.low_background_priority)

_local change_alt_level <<

_if top? _isnt _false

_then

>> - _self.alternative_level

_else

>> 0

_endif

_local r << _self.replicate(change_alt_level,_true)

_protect

_for rr _over r.actual_alternatives(:same_view?,_true)

_loop

_local alt_name << rr.alternative_path_name()

# _if alt_name.write_string.matches?("*{consistency_validation}") _then _continue _endif

_local cps << rr.checkpoints.as_sorted_collection(_proc(lhs,rhs)

_if lhs _is :current

_then

_if rhs _is :current

_then

_return _maybe

_else

_return _true

_endif

_elif rhs _is :current

_then

_return _false

_endif

_local res << rhs.time_created _cf lhs.time_created

_if res _isnt _maybe

_then

_return res

_endif

_return lhs.checkpoint_name _cf rhs.checkpoint_name

_endproc)

cps.add(:current)

_for cp _over cps.fast_elements()

_loop

_local cp_name

_local cp_time

_if cp _is :current

_then

rr.rollforward()

cp_name << :current

cp_time << rr.time_last_updated()

_else

cp_name << cp.checkpoint_name

rr.go_to_checkpoint(cp_name)

cp_time << cp.time_created

_endif

_loopbody(rr,alt_name,cp_name,0,cp_time)

_if rr.alternative_level > 0

_then

_local rrr << rr.replicate(:base,_true)

_protect

_local level_diff << -1

_loopbody(rrr,alt_name,cp_name,level_diff,rrr.time_last_updated())

_loop

_if rrr.alternative_level = 0 _then _leave _endif

rrr.up_base()

level_diff -<< 1

_loopbody(rrr,alt_name,cp_name,level_diff,rrr.time_last_updated())

_endloop

_protection

rrr.discard()

_endprotect

_endif

_endloop

_endloop

_protection

r.discard()

_endprotect

_endmethod

$

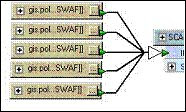

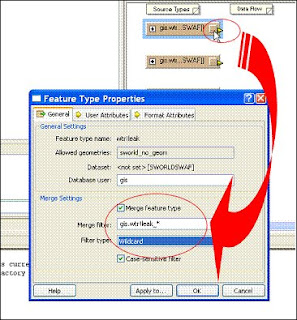

I used the Workbench for the first time to create an fmw file for exporting objects. I used the Scale Transformer which appears to work. Is this the right approach or should I create an FME coordinate system that matches our Smallworld database coordinate system? This must be a common problem for Smallworld/FME users.

FACTORY_DEF SWORLD SamplingFactory \

INPUT FEATURE_TYPE * \

@Scale(0.001) \

SAMPLE_RATE 1

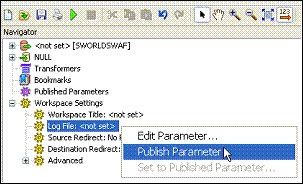

Answer: If all of your export collections are processed by the same FMW file (which I see they are, and which I think is a good thing), then I do not see a reason to put the code in a loop.

_block

_global fme_tics_server

_local current_server

# start the fme_tics_server if not already running

_if (current_server << fme_tics_server.current_server) _is _unset _orif

_not current_server.acp_running?

_then

fme_tics_server.start(_unset,_unset,:gis)

current_server << fme_tics_server.current_server

_endif

# indicate which records to prepare for export

_local vc << gis_program_manager.cached_dataset(:gis).collections

_local export_records << rwo_set.new_from(vc[:table1])

export_records.add_all(vc[:table2])

export_records.add_all(vc[:table3])

current_server.set_output_rwo_set(export_records)

# run the command line FME

_local command << {"\\gis\FME\fme","\\server\mapping\FME\sworld2mapinfo.fmw",

"--DestDataset_MAPINFO",'"\\server\mapping\FME\fme2007"',

"--PORT_SWORLDSWAF", current_server.port_number.write_string}

system.do_command(command)

_endblock

$

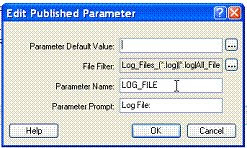

_local command << {"\\gis\FME\fme","\\server\mapping\FME\sworld2mapinfo.fmw",

"--DestDataset_MAPINFO",'"\\server\mapping\FME\fme2007"',

"--PORT_SWORLDSWAF", current_server.port_number.write_string,

"--LOG_FILE","\\server\mapping\FME\fme2007\mapinfo.log"}

MagikSF> smallworld_product.applications.an_element().plugin(:map_plugin).debug!source_files

$

sw:rope:[1-2]

MagikSF> print(!)

$

rope(1,2):

1 ".....\sw_user_custom\pni_gui\resources\base\data\config.xml"

2 ".....\sw_user_custom\custom_application\resources\base\data\config.xml"